Interaction & Performance: Introductory References

This is a preliminary document collecting references for the ICMA Workgroup on Interaction and Performance.

Version on August/05/02 by M. Wanderley

Introduction

Interaction & Performance addresses current trends in the design and development of interactive systems, a category that includes virtually every type of device or system a human interacts with. In the music context, three types of interaction are distinguished (Bongers 1999): Performer-System interaction (e.g., a musician playing an electronic instrument), System-Audience interaction (e.g., interactive installations), and Performer-System-Audience interaction.

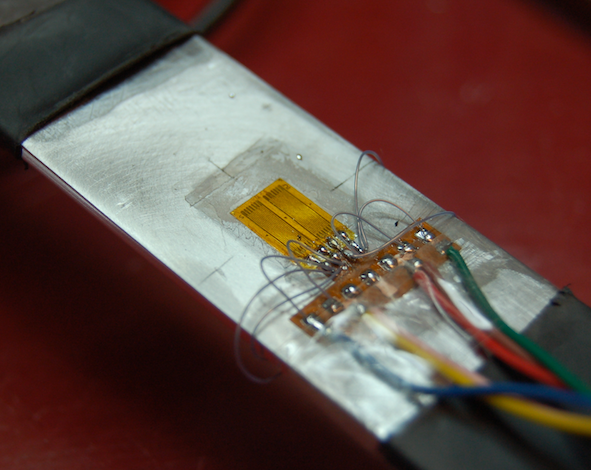

The interaction between performer and system usually takes place by means of an interface or instrument. The interface is part of the system, or machine, and consists of sensors and actuators. Sensors are the sense organs of a machine: through its sensing inputs, a machine can receive information from its environment and can therefore be controlled. Machine output takes place through actuators, such as a loudspeaker. In new electronic musical instruments, such as the Theremin, the Lady’s Glove, the T-Stick, the Yamaha Miburi, and the Reactable, mapping algorithms and sound synthesizers also contribute to the performance of the system.
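The chain described above (sensor input, mapping, synthesis parameters) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and does not describe any of the instruments named above: the two hypothetical sensors (position and pressure), the 10-bit raw range of 0 to 1023, the 220 Hz base pitch, and the names `normalize`, `map_gesture`, and `SynthParams`.

```python
from dataclasses import dataclass


@dataclass
class SynthParams:
    """Parameters handed to a (hypothetical) sound synthesizer."""
    frequency_hz: float
    amplitude: float


def normalize(raw: int, raw_min: int = 0, raw_max: int = 1023) -> float:
    """Scale a raw sensor reading to 0.0-1.0, clamping out-of-range values.

    The 0-1023 default assumes a 10-bit sensor reading.
    """
    clamped = max(raw_min, min(raw, raw_max))
    return (clamped - raw_min) / (raw_max - raw_min)


def map_gesture(position_raw: int, pressure_raw: int) -> SynthParams:
    """One-to-one mapping: position controls pitch, pressure controls loudness."""
    position = normalize(position_raw)
    pressure = normalize(pressure_raw)
    # Exponential pitch mapping spanning two octaves above 220 Hz.
    frequency_hz = 220.0 * 2.0 ** (2.0 * position)
    # Squared curve gives finer control at low pressure values.
    amplitude = pressure ** 2
    return SynthParams(frequency_hz, amplitude)


if __name__ == "__main__":
    print(map_gesture(position_raw=512, pressure_raw=700))
```

A one-to-one mapping is the simplest possible strategy; other strategies (one-to-many, many-to-one) would change only the body of `map_gesture`, leaving the sensor and synthesis stages untouched.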

The interaction between system and audience frequently involves the audience acting on the work of art, or the piece responding to users’ activity, through an interactive installation. Artists produce several kinds of interactive installations, including CD-ROM-based, web-based, and mobile-based installations. One example involving sound is the Interaction Chair.

In Performer-System-Audience interaction, both the artist and the audience are active parts of the interactive loop: in addition to interacting with the audience directly, the performer communicates with the audience through the system.

Related Disciplines

- Music

- Psychoacoustics

- Engineering and Computer Science

- Human Factors and Ergonomics

- Human Computer Interaction

Topics

- Performance Practice and Interactivity in New Musical Instruments

- Interactive Installations

- Network-based Collaborative Performance

- Live Electroacoustic Music

- Music Visualization

- Music Composition and Performance

- Multimedia Applications

Design Issues

- Final Goal of the Interaction - Role of the System

- Primary Form of Interaction (aural, tactual, visual)

- Type of Sensors

- Degrees of Freedom of the Controller

- Mapping strategies

- Sound/haptic/video synthesis methods (hardware, software)

- Available Feedback

Evaluation of Interactive Music Systems

Concepts to consider (Wanderley & Orio 2002):

- Usability of Controllers: learnability, explorability, feature controllability, and timing controllability.

- Proposed Musical Tasks: musical instrument manipulation metaphor, score level metaphors, continuous feature modulation, synchronization of processes.

- Comparison with HCI Research

General Overviews and Readings

- Bongers, B. 1998. “An interview with Sensorband.” Computer Music Journal 22(1):13-24.

- Choi, I. 2000. “Gestural Primitives and the Context for Computational Processing in an Interactive Performance System.” In M. Wanderley and M. Battier, eds. Trends in Gestural Control of Music. Ircam - Centre Pompidou.

- Garnett, G., and C. Goudeseune. 1999. “Performance Factors in Control of High-Dimensional Spaces.” In Proceedings of the 1999 International Computer Music Conference. San Francisco, International Computer Music Association, pp. 268-271.

- Pressing, J. 1990. “Cybernetic Issues in Interactive Performance Systems.” Computer Music Journal 14(1):12-25.

- Ryan, J. 1991. “Some Remarks on Musical Instrument Design at STEIM.” Contemporary Music Review 6(1):3-17.

- Ryan, J. 1992. “Effort and Expression.” In Proceedings of the 1992 International Computer Music Conference. San Francisco, International Computer Music Association, pp. 414-416.

- Schloss, W. A., and D. A. Jaffe. 1993. “Intelligent Musical Instruments: The Future of Musical Performance or the Demise of the Performer?” Interface (Journal for New Music Research), The Netherlands, December 1993.

- Tanaka, A. 2000. “Musical Performance Practice on Sensor-based Instruments.” In M. Wanderley and M. Battier, eds. Trends in Gestural Control of Music. Ircam - Centre Pompidou.

- Winkler, T. 2000. “Participation and Response in Movement-Sensing Installations.” In Proceedings of the 2000 International Computer Music Conference. San Francisco, International Computer Music Association, pp. 137-140.

- Bongers, B. 1999. “Exploring Novel Ways of Interaction in Musical Performance.” In Proceedings of the 3rd Conference on Creativity & Cognition. Loughborough, United Kingdom, pp. 76-81.

- Wanderley, M. M., and N. Orio. 2002. “Evaluation of Input Devices for Musical Expression: Borrowing Tools from HCI.” Computer Music Journal 26(3):62-76.

Complementary References

- Goudeseune, C. 2001. Composing with Parameters for Synthetic Instruments. PhD Thesis, University of Illinois at Urbana-Champaign.

- Marrin Nakra, T. 2000. Inside the Conductor's Jacket: Analysis, Interpretation and Musical Synthesis of Expressive Gesture. PhD Thesis, MIT Media Lab.

- Mulder, A. 1998. Design of Virtual Three-Dimensional Instruments for Sound Control. PhD Thesis, Simon Fraser University, Burnaby, BC, Canada.